
It must be said at the outset that what we currently call artificial intelligence is in no position to take over the world. However, it is a tool that can do a lot of damage in the wrong hands. Machine learning systems are still simply machines, without emotions or intent, and as such they can be misused. What are the current threats?

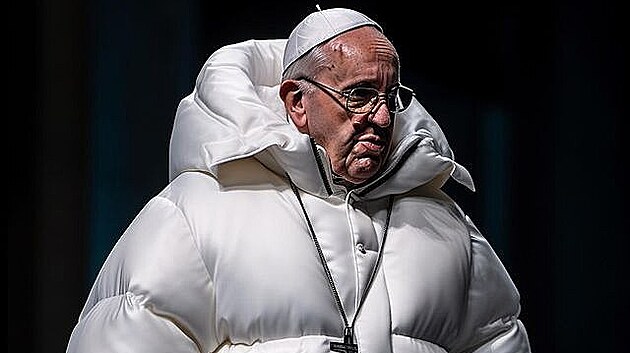

In general, the biggest problems are caused by so-called fakes: fabricated images, videos and audio recordings. Their power comes from how we perceive the world and what we want to believe. After all, the saying "a picture is worth a thousand words" did not come about by chance.

We have already learned that not everything we hear is true and that "paper will bear anything", so we are more careful with words. With a picture, however, we are more inclined to accept it as evidence of what it shows. Very convincing fakes have existed for some time, but producing a reliable one used to take a long time. The arrival and continued improvement of generative AI will allow such forgeries to be churned out as if on a conveyor belt. The combination of speed of creation, and therefore volume, with the ever-increasing quality of the resulting photomontages makes these fakes even more dangerous.

For now, we can take comfort in the fact that closer inspection usually reveals inconsistencies in the background or elsewhere. But who has the time these days to examine image after image, especially when their message matches our worldview?

Not so long ago, for example, we used to laugh at image generators because the number of fingers on a hand never added up. Through continued training, however, the systems are gradually overcoming even this problem. No wonder some people were taken in by a photo of the pope in a luxurious puffer jacket; after all, who would scrutinize a photo for oddities when it seemed to show nothing significant?

And it doesn't stop at a fake pope: last week, an AI-generated image won a photography contest, and no one recognized it as a fake.
