In the professional segment, Nvidia’s high-performance offering is built around the Hopper H100 GPU. Depending on the variant (SXM5 card or PCIe card), the chip has 16,896 or 14,592 FP32 CUDA cores.

Either way, the chip has a 5120-bit memory interface feeding up to 80 GB of memory: HBM3 with about 3 TB/s of bandwidth on the SXM5 card, or HBM2e with about 2 TB/s on the PCIe card. For now, both versions top out at 80 GB. We stress “for now” because, according to a report, this may soon change.
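Memory speed figures for these cards are easy to misread, because aggregate bandwidth (TB/s) and per-pin data rate (Gbit/s) are often quoted with similar numbers. Peak bandwidth is the bus width multiplied by the per-pin data rate, divided by 8 bits per byte. The short sketch below works backward from the roughly 3 TB/s (SXM5) and 2 TB/s (PCIe) launch figures, which are assumptions taken from Nvidia’s announcement rather than from this report, to the per-pin rate a 5120-bit bus would need. It is illustrative arithmetic, not an official specification.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, per_pin_gbit_s: float) -> float:
    """Peak bandwidth in GB/s when every pin moves per_pin_gbit_s gigabits per second."""
    return bus_width_bits * per_pin_gbit_s / 8  # 8 bits per byte


def per_pin_rate_gbit_s(bus_width_bits: int, bandwidth_gb_s: float) -> float:
    """Inverse: per-pin data rate (Gbit/s) needed to reach a given bandwidth in GB/s."""
    return bandwidth_gb_s * 8 / bus_width_bits


BUS_WIDTH = 5120  # bits, as stated in the article for both H100 variants

# Assumed launch bandwidth figures, in GB/s (about 3 TB/s and 2 TB/s).
for name, bw in [("H100 SXM5 (HBM3)", 3000.0), ("H100 PCIe (HBM2e)", 2000.0)]:
    rate = per_pin_rate_gbit_s(BUS_WIDTH, bw)
    print(f"{name}: {bw / 1000:.1f} TB/s needs {rate:.3f} Gbit/s per pin")
```

In other words, “3.0” in this context is the aggregate bandwidth in TB/s; the individual HBM3 pins run at roughly 4.7 Gbit/s under these assumptions.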

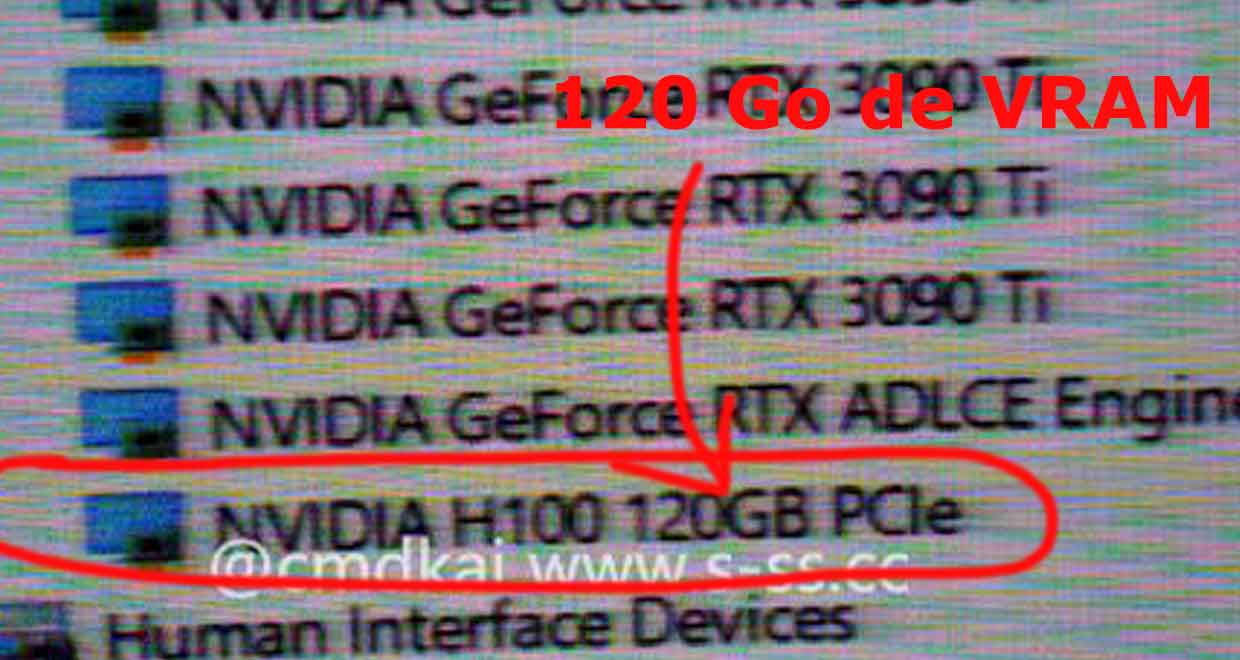

Nvidia is reportedly considering a PCIe version of the Hopper H100 GPU with a total of 120 GB of video memory. The type of VRAM, however, is not known. According to the Chinese website s-ss.cc, this 120 GB variant would use a full GH100 chip, in other words one with all of its units unlocked.

The move to more VRAM is driven by the rapidly growing size and complexity of HPC workloads, which need more on-board memory to keep performance up.

| | H100 (SXM5) | H100 (PCIe) | A100 (SXM4) | A100 (PCIe) |
|---|---|---|---|---|
| GPU | GH100 | GH100 | GA100 | GA100 |
| Process node | 4 nm | 4 nm | 7 nm | 7 nm |
| Transistors | 80 billion | 80 billion | 54.2 billion | 54.2 billion |
| Die size | 814 mm² | 814 mm² | 826 mm² | 826 mm² |
| SMs | 132 | 114 | 108 | 108 |
| TPCs | 66 | 57 | 54 | 54 |
| FP32 CUDA cores | 16,896 | 14,592 | 6,912 | 6,912 |
| FP64 CUDA cores | 8,448 | 7,296 | 3,456 | 3,456 |
| Tensor cores | 528 | 456 | 432 | 432 |
| Texture units | 528 | 456 | 432 | 432 |
| Boost clock | ? | ? | 1410 MHz | 1410 MHz |
| Memory interface | 5120-bit HBM3 | 5120-bit HBM2e | 6144-bit HBM2e | 6144-bit HBM2e |
| Memory | Up to 80 GB HBM3 @ 3.0 TB/s | Up to 80 GB HBM2e @ 2.0 TB/s | Up to 40 GB HBM2 @ 1.6 TB/s; up to 80 GB HBM2 @ 1.6 TB/s | Up to 40 GB HBM2 @ 1.6 TB/s; up to 80 GB HBM2 @ 2.0 TB/s |
| L2 cache | 51,200 KB | 51,200 KB | 40,960 KB | 40,960 KB |
| TDP | 700 W | 350 W | 400 W | 250 W |
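Several rows of the table follow directly from the SM count. The sketch below recomputes them, assuming per-SM figures from Nvidia’s architecture documentation (128 FP32 cores on a Hopper SM versus 64 on an Ampere SM, 4 Tensor cores and 4 texture units per SM on both, and two SMs per TPC). It is a quick consistency check on the published numbers, not an official calculator; the dictionary and function names are illustrative.

```python
# Per-SM unit counts, assumed from Nvidia's Ampere and Hopper architecture whitepapers.
PER_SM = {
    "Hopper": {"fp32": 128, "tensor": 4, "texture": 4},
    "Ampere": {"fp32": 64, "tensor": 4, "texture": 4},
}
SMS_PER_TPC = 2  # assumption: two SMs per TPC on both architectures

# (architecture, SM count, figures as printed in the article's table)
CARDS = {
    "H100 (SXM5)": ("Hopper", 132, {"tpc": 66, "fp32": 16896, "tensor": 528, "texture": 528}),
    "H100 (PCIe)": ("Hopper", 114, {"tpc": 57, "fp32": 14592, "tensor": 456, "texture": 456}),
    "A100 (SXM4)": ("Ampere", 108, {"tpc": 54, "fp32": 6912, "tensor": 432, "texture": 432}),
    "A100 (PCIe)": ("Ampere", 108, {"tpc": 54, "fp32": 6912, "tensor": 432, "texture": 432}),
}


def derived_counts(arch: str, sms: int) -> dict:
    """Recompute per-card unit totals by scaling the per-SM counts by the SM count."""
    counts = {"tpc": sms // SMS_PER_TPC}
    for unit, per_sm in PER_SM[arch].items():
        counts[unit] = sms * per_sm
    return counts


for card, (arch, sms, table) in CARDS.items():
    derived = derived_counts(arch, sms)
    verdict = "matches" if derived == table else "differs from"
    print(f"{card}: {derived} {verdict} the table")
```

Every card checks out under these assumptions, which also explains why the PCIe H100, with 18 fewer SMs, loses 2,304 FP32 cores and 72 Tensor cores relative to the SXM5 card.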
